I am a graduate of the School of Computer Science and Engineering (SCSE) at Beihang University (北京航空航天大学 计算机学院). My research is centered on Large Language Models (LLMs) and Multimodal Large Language Models (MLLMs). I am currently an intern at ByteDance Seed, where my focus is on enhancing the coding capabilities of LLMs. I am also passionate about exploring Data Curation methodologies and the potential of Small Models.

I am a passionate advocate for open-source and take pride in contributing to the community with a variety of projects, models, and datasets on GitHub and Hugging Face. It is also my pleasure to give back to the academic world by serving as a reviewer for international conferences and workshops.

I find great joy in connecting with others and sharing knowledge through my technical blog. If my work resonates with you or if you have any ideas to discuss, I would be delighted to hear from you. You can find my email on the left.

I am a native speaker of Chinese, with professional working proficiency in English and German.

🔥 News

- [2025.10] 🎉 One paper accepted to IJCNLP-AACL 2025.

- [2025.09] 🎉 One paper accepted to EMNLP 2025 Workshops.

- [2025.08] 🎉 One paper accepted to EMNLP 2025.

- [2025.07] 🎉 Two papers accepted to ICCV 2025 Workshops.

- [2025.06] 🎉 Two papers accepted to ICML 2025 Workshops.

- [2025.04] 🏅 Received 3 Great Review distinctions in ACL Rolling Review (Feb. cycle).

- [2024.12] 🎉 One paper accepted to AAAI 2025.

📝 Publications

Authors marked with * contributed equally (co-first authors).

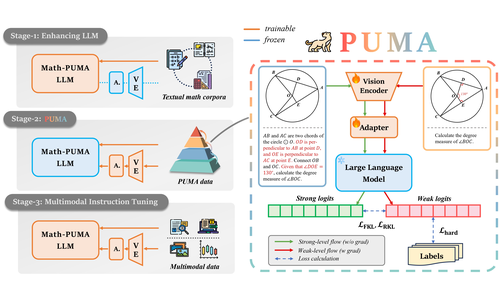

Math-PUMA: Progressive Upward Multimodal Alignment to Enhance Mathematical Reasoning

Wenwen Zhuang*, Xin Huang*, Xiantao Zhang*, Jin Zeng

- This paper introduces Math-PUMA, a novel training methodology that uses Progressive Upward Multimodal Alignment to significantly narrow the performance gap between textual and visual modalities in mathematical reasoning, achieving state-of-the-art results among open-source MLLMs.

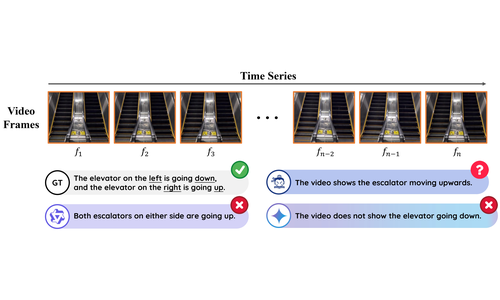

The Escalator Problem: Identifying Implicit Motion Blindness in AI for Accessibility

Xiantao Zhang

- This paper identifies “Implicit Motion Blindness” in MLLMs, exemplified by their failure to determine an escalator’s direction, and argues that this flaw, caused by the prevalent frame-sampling paradigm, necessitates a paradigm shift from semantic recognition to robust physical perception to build trustworthy assistive AI for the visually impaired.

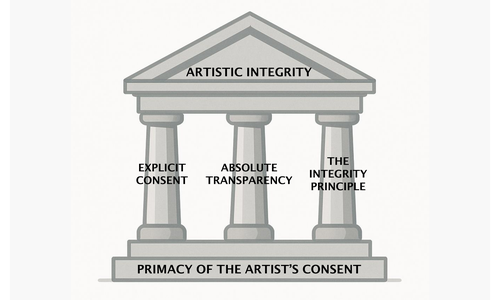

Xiantao Zhang

- This paper proposes an ethical framework for posthumous AI art, demanding the artist’s explicit consent, mandatory transparency, and an integrity rule that forbids AI from fabricating new works where no verifiable intent exists.

📖 Education

- 2021.09 - 2025.06, B.S. in Computer Science, School of Computer Science and Engineering (SCSE), Beihang University.

🎖 Honors and Awards

- 2025.06, Outstanding Bachelor Thesis, School of Computer Science and Engineering (SCSE), Beihang University.

💻 Internships

- 2025.09 - Present, Seed, ByteDance.

- 2024.07 - 2024.09, Gauth, ByteDance.

📝 Academic Service

Conferences:

- Program Committee Member, AAAI 2026

- Reviewer, EMNLP 2025 (ARR May cycle)

- Reviewer, ACM Multimedia 2025

- Reviewer, ACL 2025 (ARR Feb. cycle) — Received 3 Great Review distinctions

Workshops:

- Reviewer, NeurIPS 2025 Workshop on MATH-AI

- Reviewer, NeurIPS 2025 Workshop on COML

- Reviewer, ICML 2025 Workshop on AIW

- Reviewer, COLM 2025 Workshop on INTERPLAY

🚀 Open Source Projects

- LLM-from-scratch: A series of hands-on reproductions and technical deep-dives, including pretraining LLaMA-3 from scratch, implementing LoRA from scratch, in-depth analyses of the Qwen series technical reports, and explorations of asynchronous concurrency in LLMs.

- Maxs-Awesome-Datasets: A diverse set of open-source datasets I have released, with a particular emphasis on Chinese datasets across various domains and topics.

✍️ Blogs

Friendly reminder: the following blog posts are written in Chinese.

- How Qwen3 Achieves Hybrid Thinking (Fast and Slow Thinking)? – 200+ likes, 300+ bookmarks

- LoRA from Scratch – 200+ likes, 400+ bookmarks

- Pretraining a Miniature LLaMA-3 from Scratch – 150+ likes, 400+ bookmarks

Read more on my Zhihu Homepage.